일전에 포스팅한 버거스 방정식(Burgers' equation)에 대한 물리정보신경망(PINN, Physics-Informed Neural Network) Tensorflow2 코드를 업데이트했다.

버거스 방정식과 초기조건, 경계조건, 그리고 신경망 구조, 콜로케이션 포인트, 데이터 포인트 등은 모두 전에 사용된 코드와 동일하다.

차이점은 두가지다. 먼저 물리정보 신경망에서 \(u_t, u_x, u_{xx}\) 를 계산할 때 기존의 tf.GradientTape.gradient 대신에 tf.gradients 함수를 사용했다. 해당 코드는 다음과 같다. @tf.function을 사용해서 한결 간단해졌다.

@tf.function

def physics_net(self, xt):

x = xt[:, 0:1]

t = xt[:, 1:2]

xt_t = tf.concat([x, t], 1)

u = self.burgers(xt_t)

u_x = tf.gradients(u, x)

u_xx = tf.gradients(u_x, x)

u_t = tf.gradients(u, t)

return u_t + u*u_x - (0.01/tf.constant(np.pi))*u_xx

두번째는 옵티마이저로서 Adam 뿐만 아니라 L-BFGS 옵티마이저도 사용한 것이다. L-BFGS는 PINN의 기본 옵티마이저로 인식될 정도로 널리 사용된다. Adam 옵티마이저를 먼저 사용하고 이어서 L-BFGS 옵티마이저를 사용했다.

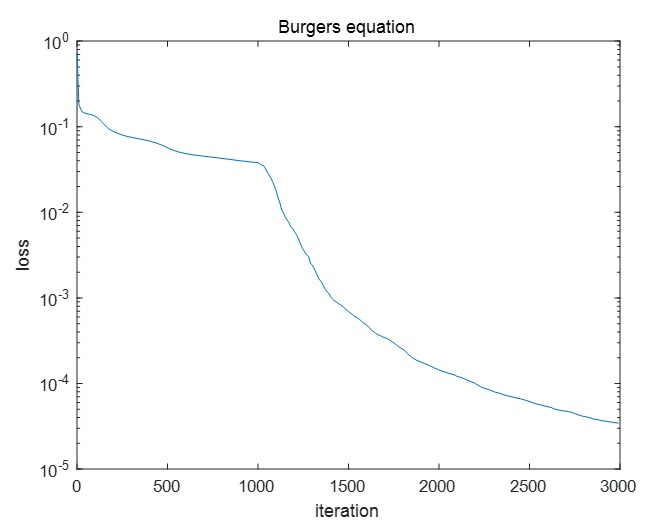

Adam 옵티마이저를 이용한 1,000번의 iteration과 바로 이어서 L-BFGS-B 옵티마이저를 이용한 2,000번의 iteration을 수행한 결과, 손실값은 다음과 같다. loss 값이 전보다 15/1000 배 작아졌음을 알 수 있다.

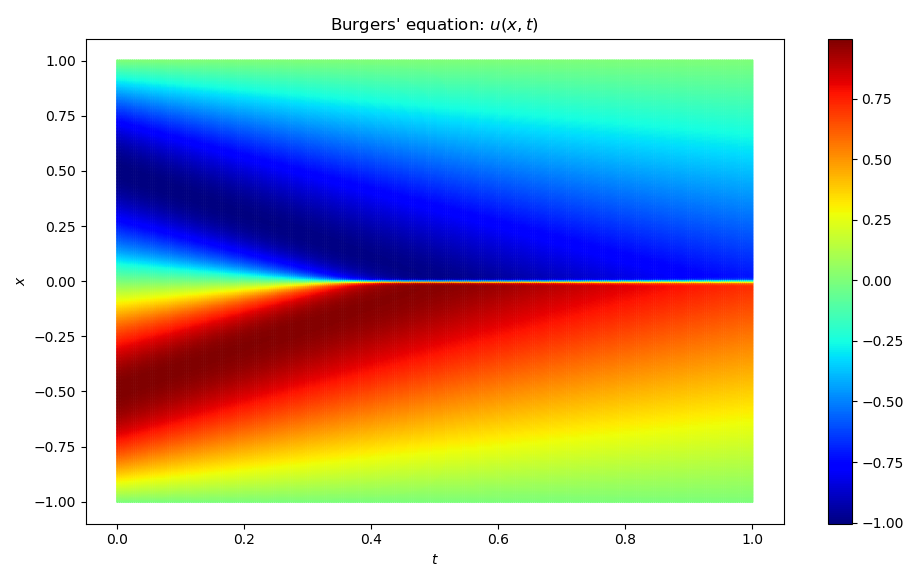

전체 계산 영역 \( x \in [-1, 1], \ t \in [0, 1]\) 에서 버거스 방정식의 해는 다음과 같다.

다음은 버거스 방정식에 대한 업데이트된 PINN의 전체 코드다.

burgers_model_lbfgs.py

# PINN for Burgers' equation (L-BFGS anf tf.gradient version)

# coded by St.Watermelon

import tensorflow as tf

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import Adam

import matplotlib.pyplot as plt

import numpy as np

import scipy.optimize

from time import time

from burgers_ic_bc_data import burgers_data

class Burgers(Model):

def __init__(self):

super(Burgers, self).__init__()

initializer = tf.keras.initializers.GlorotUniform

self.h1 = Dense(20, activation='tanh', kernel_initializer=initializer)

self.h2 = Dense(20, activation='tanh', kernel_initializer=initializer)

self.h3 = Dense(20, activation='tanh', kernel_initializer=initializer)

self.h4 = Dense(20, activation='tanh', kernel_initializer=initializer)

self.h5 = Dense(20, activation='tanh', kernel_initializer=initializer)

self.h6 = Dense(20, activation='tanh', kernel_initializer=initializer)

self.u = Dense(1, activation='linear')

def call(self, state):

x = self.h1(state)

x = self.h2(x)

x = self.h3(x)

x = self.h4(x)

x = self.h5(x)

x = self.h6(x)

out = self.u(x)

return out

class BurgersPinn(object):

def __init__(self):

self.lr = 0.001

self.opt = Adam(self.lr)

self.burgers = Burgers()

self.burgers.build(input_shape=(None, 2))

self.train_loss_history = []

self.iter_count = 0

self.instant_loss = 0

@tf.function

def physics_net(self, xt):

x = xt[:, 0:1]

t = xt[:, 1:2]

xt_t = tf.concat([x, t], 1)

u = self.burgers(xt_t)

u_x = tf.gradients(u, x)

u_xx = tf.gradients(u_x, x)

u_t = tf.gradients(u, t)

return u_t + u*u_x - (0.01/tf.constant(np.pi))*u_xx

def save_weights(self, path):

self.burgers.save_weights(path + 'burgers.h5')

def load_weights(self, path):

self.burgers.load_weights(path + 'burgers.h5')

def compute_loss(self, f, u_bnd_hat, u_bnd_sol):

loss_col = tf.reduce_mean(tf.square(f))

loss_bnd = tf.reduce_mean(tf.square(u_bnd_hat - u_bnd_sol))

loss = loss_col + loss_bnd

return loss

def compute_grad(self, xt_col, xt_bnd, u_bnd_sol):

with tf.GradientTape() as tape:

f = self.physics_net(xt_col)

u_bnd_hat = self.burgers(xt_bnd)

loss = self.compute_loss(f, u_bnd_hat, u_bnd_sol)

grads = tape.gradient(loss, self.burgers.trainable_variables)

return loss, grads

def callback(self, arg=None):

if self.iter_count % 10 == 0:

print('iter=', self.iter_count, ', loss=', self.instant_loss)

self.train_loss_history.append([self.iter_count, self.instant_loss])

self.iter_count += 1

def train_with_adam(self, xt_col, xt_bnd, u_bnd_sol, adam_num):

def learn():

loss, grads = self.compute_grad(xt_col, xt_bnd, u_bnd_sol)

self.opt.apply_gradients(zip(grads, self.burgers.trainable_variables))

return loss

for iter in range(int(adam_num)):

loss = learn()

self.instant_loss = loss.numpy()

self.callback()

def train_with_lbfgs(self, xt_col, xt_bnd, u_bnd_sol, lbfgs_num):

def vec_weight():

# vectorize weights

weight_vec = []

# Loop over all weights

for v in self.burgers.trainable_variables:

weight_vec.extend(v.numpy().flatten())

weight_vec = tf.convert_to_tensor(weight_vec)

return weight_vec

w0 = vec_weight().numpy()

def restore_weight(weight_vec):

# restore weight vector to model weights

idx = 0

for v in self.burgers.trainable_variables:

vs = v.shape

# weight matrices

if len(vs) == 2:

sw = vs[0] * vs[1]

updated_val = tf.reshape(weight_vec[idx:idx + sw], (vs[0], vs[1]))

idx += sw

# bias vectors

elif len(vs) == 1:

updated_val = weight_vec[idx:idx + vs[0]]

idx += vs[0]

# assign variables (Casting necessary since scipy requires float64 type)

v.assign(tf.cast(updated_val, dtype=tf.float32))

def loss_grad(w):

# update weights in model

restore_weight(w)

loss, grads = self.compute_grad(xt_col, xt_bnd, u_bnd_sol)

# vectorize gradients

grad_vec = []

for g in grads:

grad_vec.extend(g.numpy().flatten())

# gradient list to array

# scipy-routines requires 64-bit floats

loss = loss.numpy().astype(np.float64)

self.instant_loss = loss

grad_vec = np.array(grad_vec, dtype=np.float64)

return loss, grad_vec

return scipy.optimize.minimize(fun=loss_grad,

x0=w0,

jac=True,

method='L-BFGS-B',

callback=self.callback,

options={'maxiter': lbfgs_num,

'maxfun': 50000,

'maxcor': 50,

'maxls': 50,

'ftol': 1.0 * np.finfo(float).eps})

def predict(self, xt):

u_pred = self.burgers(xt)

return u_pred

def train(self, adam_num, lbfgs_num):

xt_col, xt_bnd, u_bnd_sol = burgers_data()

# Start timer

t0 = time()

self.train_with_adam(xt_col, xt_bnd, u_bnd_sol, adam_num)

# Print computation time

print('\nComputation time of adam: {} seconds'.format(time() - t0))

t1 = time()

self.train_with_lbfgs(xt_col, xt_bnd, u_bnd_sol, lbfgs_num)

# Print computation time

print('\nComputation time of L-BFGS-B: {} seconds'.format(time() - t1))

self.save_weights("./save_weights/")

np.savetxt('./save_weights/loss.txt', self.train_loss_history)

train_loss_history = np.array(self.train_loss_history)

plt.plot(train_loss_history[:, 0], train_loss_history[:, 1])

plt.yscale("log")

plt.show()

# main

def main():

adam_num = 1000

lbfgs_num = 2000

agent = BurgersPinn()

agent.train(adam_num, lbfgs_num)

if __name__=="__main__":

main()

burgers_ic_bc_data.py

# Setting initial and boundary conditions

# PINN for Burgers' equation (L-BFGS version)

# coded by St.Watermelon

import tensorflow as tf

import numpy as np

def burgers_data():

# set number of data points

N_b = 500 # boundary

N_t = 500 # initial time

N_c = 20000 # collocation point

# set boundary

xmin = -1.0

xmax = 1.0

tmin = 0.0

tmax = 1.0

# initial condition

initial_xt = np.linspace([xmin, tmin], [xmax, tmin], N_t)

initial_u = -np.sin(np.pi * initial_xt[:,0]).reshape(-1,1)

# boundary condition

boundary_up = np.linspace([xmax, tmin], [xmax, tmax], N_b)

boundary_up_sol = np.zeros((N_b, 1))

boundary_down = np.linspace([xmin, tmin], [xmin, tmax], N_b)

boundary_down_sol = np.zeros((N_b, 1))

# collection of initial and boundary condition

xt_bnd = np.concatenate([initial_xt, boundary_up, boundary_down], axis=0)

u_bnd_sol = np.concatenate([initial_u, boundary_up_sol, boundary_down_sol], axis=0)

# collocation point

t_col_data = np.random.uniform(tmin, tmax, [N_c, 1])

x_col_data = np.random.uniform(xmin, xmax, [N_c, 1])

xt_col_data = np.concatenate([x_col_data, t_col_data], axis=1)

xt_col = np.concatenate((xt_col_data, xt_bnd), axis=0)

# convert all to tensors

xt_col = tf.convert_to_tensor(xt_col, dtype=tf.float32)

xt_bnd = tf.convert_to_tensor(xt_bnd, dtype=tf.float32)

u_bnd_sol = tf.convert_to_tensor(u_bnd_sol, dtype=tf.float32)

return xt_col, xt_bnd, u_bnd_sol

load_eval_map.py

from burgers_model_lbfgs import *

import matplotlib.pyplot as plt

agent = BurgersPinn()

agent.load_weights('./save_weights/')

# data for validation

x_input = np.linspace(-1.0, 1.0, 300)

t_input = np.linspace(0.0, 1.0, 300)

x_data = x_input.reshape(-1,1)

uxt_res = []

for t in t_input:

t_data = t * np.ones(x_data.shape)

xt_data = np.concatenate([x_data, t_data], axis=1)

u_pred = agent.predict(tf.convert_to_tensor(xt_data, dtype=tf.float32))

uxt = np.concatenate([xt_data, u_pred.numpy()], axis=1)

uxt_res.append(uxt)

uxt_res = np.array(uxt_res)

uxt_res = uxt_res.reshape(-1,3)

# plotting

plt.figure(1)

plt.scatter(uxt_res[:, 1], uxt_res[:, 0], c=uxt_res[:,2], s=1, cmap="jet")

plt.colorbar()

plt.xlabel('$t$')

plt.ylabel('$x$')

plt.title("Burgers' equation: $u(x,t)$")

plt.show()

'AI 딥러닝 > DLA' 카테고리의 다른 글

| [U-Net] 망막 혈관 세그멘테이션 (Retinal Vessel Segmentation) (0) | 2022.05.11 |

|---|---|

| [U-Net] U-Net 구조 (0) | 2022.05.11 |

| [PINN] 비압축성 유체 정보 기반 신경망 (Incompressible NS-Informed Neural Network) (0) | 2021.11.02 |

| [PINN] 물리 정보 신경망 (Physics-Informed Neural Network) (0) | 2021.09.19 |

| [CNN] 컨볼루션과 상관도 (0) | 2020.09.22 |

댓글